Test automation reporting tools

Features to consider when looking for the best test automation reporting tool for your needs

24 Nov 2021

Let's talk about what to consider when deciding which test automation reporting tool to use for your project.

First, a quick note on test automation. Test automation is critical for software engineering teams maintaining complex systems. Regression (breaking existing functionality) is not uncommon when implementing new changes or features. Automated tests make it possible to spot regression efficiently. Besides spotting regression, automated tests enable greater test coverage, and automation can carry out more testing than even an army of human testers. Testing programmatically is increasingly the only way to test systems with highly complex parallel test scenarios and large data sets.

For any team that takes quality seriously and maintains systems that are mission critical to a business, test automation is important. Automated tests are sometimes run ad hoc by request, but teams usually set up jobs to run at a regular interval or run tests as part of their build and continuous integration process. Having a way to manage the results data output by test automation systems becomes necessary, and that is the point where you need to start considering reporting tools. If you don't have any automated tests yet, it's probably best to create a few tests and then start looking into test automation reporting dashboards and tools.

Why use a test automation reporting tool?

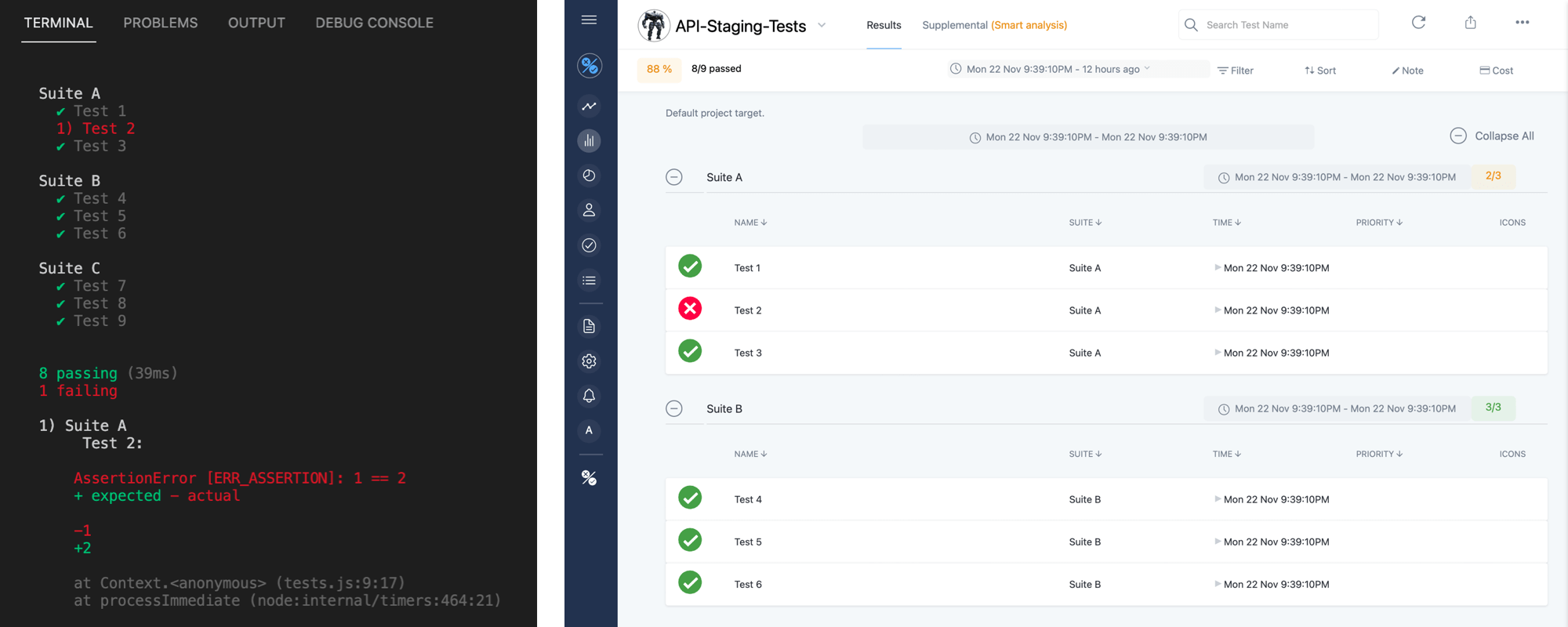

The obvious question to begin with is why use any test automation reporting tool at all. Why not just log test results out to the console and be done? This can be fine if your team is small, you don't have many tests and you run tests only occasionally by triggering them manually. The problem with this approach becomes clearer as a product's feature set grows, testing becomes more sophisticated and the number of test cases gets larger. Parsing and making sense of the test output becomes increasingly challenging.

The scale of testing that automation can do means test results data output can be large. Managing large amounts of data generated from software that customers of a business use has become a specialized field, big data, with techniques developed in recent years to manage and analyze this data. When it comes to managing large amounts of test automation data, it's also important to consider how your team will handle this. One solution is with a test automation reporting tool.

Test automation reporting also serves as a feedback mechanism to your test developers and helps to keep tests up-to-date. Beyond scale and data processing, read on to understand what other benefits good test automation reporting tools provide and what to look for when considering a tool.

Maintaining a test automation reporting tool

You'll need to decide whether you want your team to maintain the test automation reporting tool or use a cloud/web-based service. This is a choice development teams commonly have to make for many development tools, including code repositories, continuous integration systems, application servers and test device farms. Tesults is a web-based tool, so there is no maintenance required and updates are applied automatically. That makes it easy to adopt for resource-constrained teams and efficient to run for larger organizations because engineers don't have to spend time maintaining the reporting system. Some teams will want an on-premise, self-hosted solution, in which case an alternative to Tesults is required. If you've got enough resources you could consider maintaining a custom internal solution, but for most teams and businesses this is unlikely to be cost effective.

Ease of integration and support for languages and test frameworks

The test cases your team maintains may change over time. Check that integrating with the reporting tool you are considering is straightforward not just for the programming language your team currently uses to write tests but also for languages and test frameworks they may start using soon. Some tools only provide APIs for C# or Java, for example. Check that the integration is easy for as many languages and frameworks as possible.

Is a standalone test automation reporting tool necessary?

If you use a non-coding test automation platform where all testing is defined within the platform itself, perhaps via a UI, and a reporting dashboard is integrated with that tool then you may not have a need for a standalone test automation reporting tool. Such systems exist for some types of UI testing. However, most teams still write coded tests for flexibility, power and control over what the tests do and will have a variety of front-end and back-end end-to-end tests and internal component tests that would benefit from having a consolidated test reporting tool. Examine your needs and decide whether a standalone test automation reporting tool makes sense for your team.

Consolidation of reporting from all test jobs

Take a look at how a tool supports consolidation of results reporting from all of your test jobs and check that adding a new test job at a later time is easy to do. The number of test cases you maintain will likely increase over time as your system grows more sophisticated, so your team will probably want to split test jobs up for specialization and add new ones for new features or products. The reporting tool should make it easy to add new test jobs and not constrain how you set up reporting for different jobs. For example, Tesults provides Targets for this purpose and adding a new test job is as simple as generating a new token from the menu. Check that any alternative tool offers a similar mechanism. Consolidating test results output from front-end clients, back-end APIs and other areas in your system is important for quality analysis, and it should not be a big task to add additional test jobs as your team needs them.

High-level and low-level insights

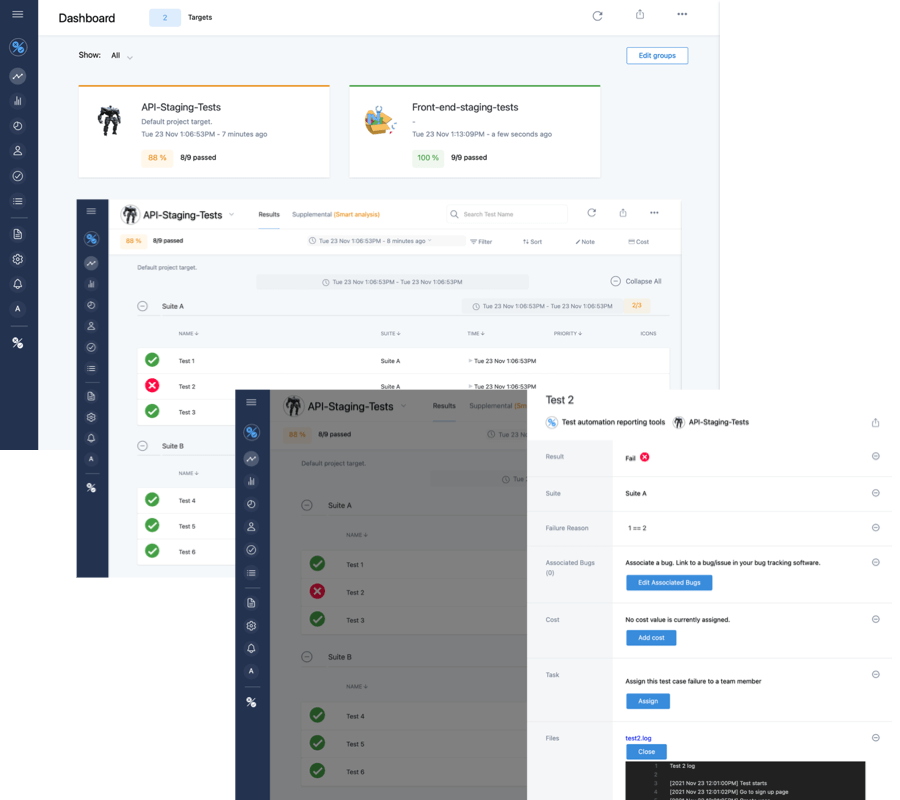

Making sense of large quantities of test data requires the ability to zoom out to see high-level summary information and zoom in to see details like logs and failures for a specific test case. If you're a release manager reviewing results from several test jobs, you probably want a high-level overview first to see which test job is highlighting failures and needs attention. From there it is useful to drill down to lists of test cases and then further to view details of an individual test case. Tesults provides the Dashboard, Results and Case views to handle these various levels of detail. Fortunately, most test tools provide similar views, but do check that the tooling you are considering gives you the level of summary and detail you require.

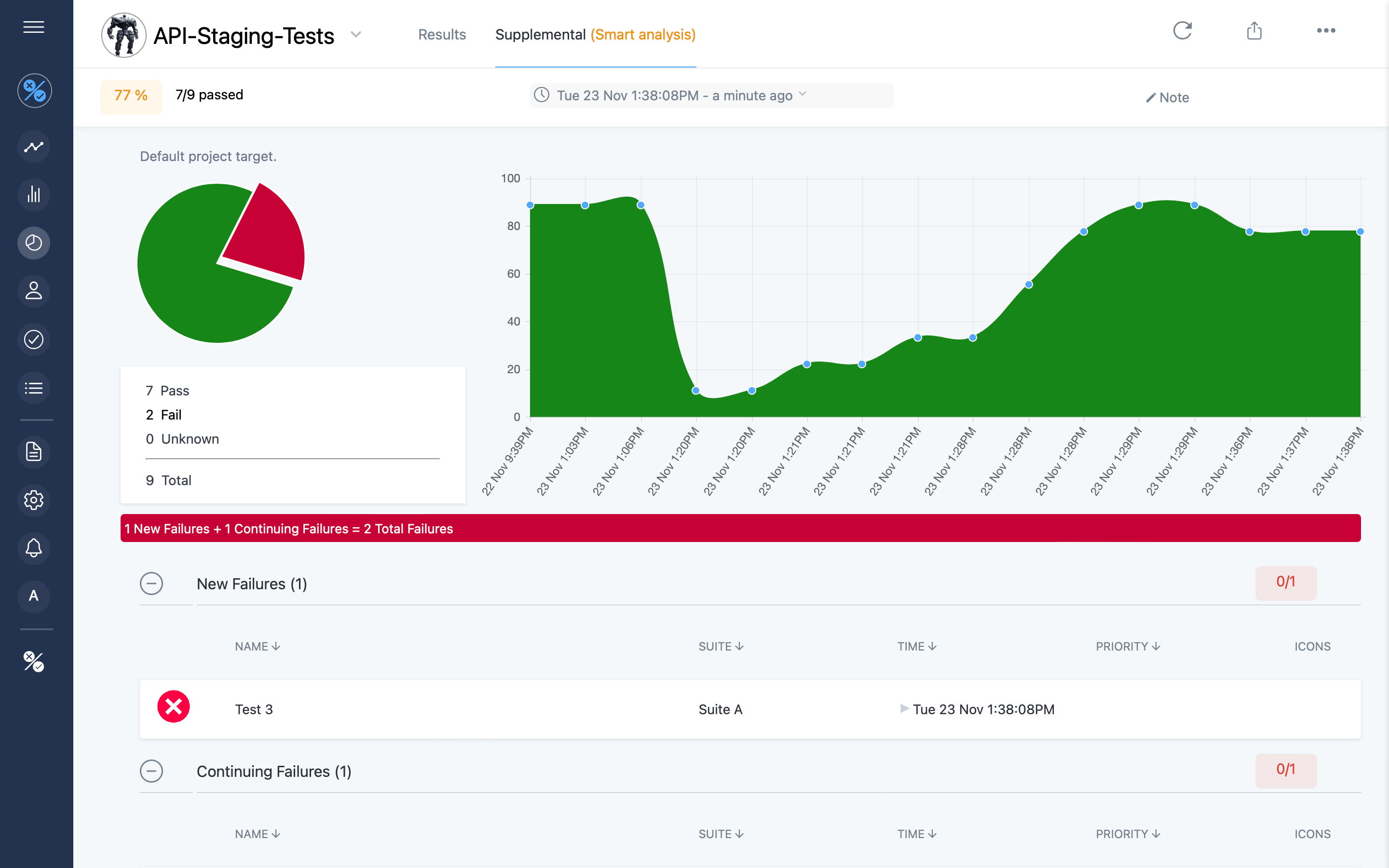
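To make the zoom-out and zoom-in idea concrete, here is a minimal sketch in Python. It is not how Tesults or any particular tool works internally; the record layout, job names and function names are all hypothetical and chosen only for illustration.

```python
from collections import Counter

# Hypothetical flat records: (test job, test case name, result).
results = [
    ("web-frontend", "login works", "pass"),
    ("web-frontend", "checkout total", "fail"),
    ("backend-api", "create order", "pass"),
    ("backend-api", "refund order", "pass"),
]


def summarize(records):
    """High-level view: pass/fail counts per test job."""
    summary = {}
    for job, _name, result in records:
        summary.setdefault(job, Counter())[result] += 1
    return summary


def failures_for(records, job):
    """Low-level view: names of the failing test cases within one job."""
    return [name for j, name, result in records if j == job and result == "fail"]


summary = summarize(results)
# Jobs a release manager would want to look at first.
jobs_needing_attention = [job for job, counts in summary.items() if counts["fail"] > 0]
```

A reviewer would start from `jobs_needing_attention` and then call `failures_for` on each job listed there, which mirrors moving from a dashboard to a list of test cases.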

Automated analysis of test results

There will be times when a comprehensive view of all of the test cases and suites within a test job is important. Tesults provides the Results view for this purpose. However, to save time when you have a large number of tests, automated analysis of the output becomes essential. The Supplemental view in Tesults carries out automatic analysis to enable an efficient review by the viewer. This includes automatically displaying new failures for the latest test run, failures that are carrying over from previous test runs, test cases that changed from failing to passing in the latest run, and suspected flaky test cases where results flip between success and failure often. Examine the tool you are assessing to see if it provides automated analysis, because without it your team will need to spend time on manual analysis.
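As a rough illustration of what this kind of analysis involves, the sketch below sorts test cases into the four categories mentioned above using each case's recent history. It is an assumption-laden example, not the Tesults implementation: the `classify` function, the history format and the flip threshold used to flag flakiness are all hypothetical.

```python
def classify(history, flaky_threshold=2):
    """Group test cases by how their results changed over recent runs.

    history maps a test case name to its results, oldest first,
    where each result is "pass" or "fail".
    """
    report = {
        "new_failures": [],
        "continuing_failures": [],
        "fixed": [],
        "suspected_flaky": [],
    }
    for name, runs in history.items():
        if not runs:
            continue
        # Count how many times the result changed between consecutive runs.
        flips = sum(1 for a, b in zip(runs, runs[1:]) if a != b)
        latest = runs[-1]
        previous = runs[-2] if len(runs) > 1 else None
        if flips >= flaky_threshold:
            # Assumption: frequent flipping outranks every other category.
            report["suspected_flaky"].append(name)
        elif latest == "fail" and previous == "fail":
            report["continuing_failures"].append(name)
        elif latest == "fail":
            report["new_failures"].append(name)
        elif previous == "fail":
            report["fixed"].append(name)
    return report


history = {
    "login": ["pass", "pass", "fail"],
    "checkout": ["fail", "fail", "fail"],
    "search": ["pass", "fail", "pass"],
    "profile": ["fail", "fail", "pass"],
}
report = classify(history)
```

With this sample data, `login` is a new failure, `checkout` is a continuing failure, `profile` has been fixed and `search` is flagged as suspected flaky. A real tool would likely look at a longer history and use a more careful flakiness heuristic.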

Test data storage

Test automation can generate artifacts such as screenshots, logs, and other files. Check how storage and data management are handled when comparing tools. Will your team be responsible for managing a database for this, or does the reporting software handle it? As a data point, Tesults stores and manages file data and test history. This can make it easy to identify when a regression was introduced and to look back through logs, images and other data as necessary.

Sharing test results, reports and notifications

Take a look at how easy it is to share a test report with team members and stakeholders when comparing reporting tools. Ideally it is simple to add a team member to the reporting software and share links. You may want to check the software supports SSO. Consider integrations with other applications as well. If your team primarily communicates via email then email notifications may be enough but if your team is remote or distributed then Slack or MS Teams notifications may be preferred. If you plan to use your test automation reporting tool to monitor production systems as well then PagerDuty and other alerting integrations are also useful.

Actioning test failures

Reviewing test results and output is important. Actioning is the next step. Consider who will be responsible for investigating a test case that has started to fail in the latest build deployed to the test environment. If the person viewing the report is the same person who will be investigating the bug, which may be the case, the next steps are clear. Sometimes though, the team member who came across the failure for the first time in the report may not be the person who will be investigating. In that case it is useful to be able to assign the failing test case to someone else to look at and to be notified about it. If this sort of actioning flow would be useful to your team check that the reporting software you are considering supports it. Tesults uses Tasks for this purpose.

Extracting results from the reporting tool

Consider how you will extract or export results from the reporting tool. Tesults provides an API for fetching results data. The main use case for this capability is programmatic analysis of test results to drive deployment decisions in a continuous integration or deployment system. Most reporting tools will have a solid user interface for analysis and viewing test results, and that will be the primary way teams interact with results. If your team has no need for anything beyond viewing results within the reporting tool then this will not be a major concern. If it is important that you can programmatically examine results data or send results data elsewhere, take a look at the APIs for the test automation reporting tool you are considering using.
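As an example of the deployment decision use case, here is a minimal sketch of a gate that a pipeline step could run once results have been fetched. It deliberately does not call any real API: `latest_results` stands in for whatever your reporting tool returns, and the `should_deploy` function and its `known_issues` parameter are hypothetical.

```python
def should_deploy(results, known_issues=frozenset()):
    """Return (ok, blocking), where blocking lists the failures that stop a deploy.

    results is a list of dicts with "name" and "result" keys.
    known_issues names failing cases that have been accepted for now.
    """
    blocking = [
        r["name"]
        for r in results
        if r["result"] == "fail" and r["name"] not in known_issues
    ]
    return (not blocking, blocking)


# Stand-in for data fetched from a reporting tool's API (hypothetical shape).
latest_results = [
    {"name": "login works", "result": "pass"},
    {"name": "checkout total", "result": "fail"},
    {"name": "legacy export", "result": "fail"},
]

ok, blocking = should_deploy(latest_results, known_issues={"legacy export"})
```

In a real pipeline the step would typically exit with a non-zero status when `ok` is false so that the deployment stage does not run, and print `blocking` so the reason is visible in the build log.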

Manual test case management and test runs

We are talking about test automation reporting tools but most teams conduct some manual testing too. While it is not necessary for a test automation reporting tool to handle manual test case management and you could use a separate tool for that, if your team is not already using something else, handling everything in one place can be useful. Check to see if the tool you are considering supports manual test case management. Tesults provides Lists for authoring and storing manual test cases. Tesults provides Runs to run a manual test run and assign test cases to team members. Having one place for software engineers and QA testers to review test output may be useful when testing for a release requires examining both automated and manual test output.

Security and audit

While test data is unlikely to include highly sensitive data, such as real customer data in production databases, it should still be treated with the same level of security consideration. Attackers may find useful data within test output, and information about unreleased products can leak to external entities if you do not take security seriously. If your team is self-hosting a reporting tool, security will chiefly be the responsibility of the team. If you are considering a web-based/cloud solution you should ensure the provider meets the security requirements your team expects. This is something that should be done for all cloud-based services of course, not just test automation reporting tools. Tesults encrypts data in transit and at rest, offers audit logs for projects and supports SSO. Check that any cloud-based automation reporting tool you are considering does the same. With self-hosted or on-premise tools, work with your internal IT and security team to ensure you are meeting appropriate safeguarding and security requirements.

Summary

There is a lot to consider when looking at test automation reporting tools. We hope the points discussed above serve as a checklist for the tooling options you are examining. We leave you with a list summarizing the key points to help you with next steps.

- Why use a test automation reporting tool? Unless you have very few tests or are a tiny team, in most cases you will benefit from using one.

- Maintaining a test automation reporting tool. Do you want to maintain a tool internally or use a cloud-based service? The move to cloud services for an increasing number of software tools is happening because of the frequent updates and improvements they provide, along with zero maintenance and enormous flexibility. In some cases having an on-premise tool may be a requirement.

- Ease of integration and support for languages and test frameworks. How easy is it to integrate the test automation reporting tool with the programming language and/or test framework you use? If your team writes tests in multiple languages, check that it is possible to consolidate results from across your systems.

- Is a standalone test automation reporting tool necessary? If you don't have software engineers programming your tests and you create all of your tests within a UI based test platform that has an integrated dashboard you may not need a standalone test automation reporting tool. In most cases though teams do code their own tests or have a mixture of coded and no code tests and a standalone reporting tool makes sense.

- Consolidation of reporting from all test jobs. Look into whether it is easy to have individual reports for all of your test jobs and that if you need to add a new test job at any time that the process for doing so is straightforward.

- High-level and low-level insights. Ensure the tooling you are considering provides a high-level view of all test jobs and allows for drilling down easily into specific test runs and test cases.

- Automated analysis of test results. With large numbers of test jobs, test runs and test cases, automatic analysis is critical to understanding the situation quickly. As an example, the Supplemental view in Tesults tells you which tests are newly failing in the latest test run, which failures are continuing, which tests have been resolved and are now passing, and even indicates which test cases may be flaky and need investigation.

- Test data storage. Test artifacts including images, logs and other files should be handled by the reporting tool.

- Sharing test results, reports and notifications. Sharing test reports with team members and stakeholders should be straightforward. If your organization uses SSO, ensure the tool supports it. You also want to check that the integrations your team may be interested in are supported, such as email, Slack, MS Teams and PagerDuty.

- Actioning test failures. Analyzing and reviewing test results is great, but what happens next? Check to see whether the reporting tool has a pipeline for actioning, e.g. assigning failing test cases to a team member. There may be a test that is impacted by a known issue and you may want to link a JIRA bug to it. In Tesults, for example, you can link bugs and use Tasks to assign a failing test case to a team member.

- Extracting results from the reporting tool. The main objective of a test automation reporting tool is to present data for viewing and most teams will spend the majority of their time viewing results within a user interface. In addition to this however, consuming results data programmatically once it is stored within the reporting tool can be useful. Check to see if the tool you are considering makes this possible like the Tesults API does. Fetching results data can be useful as part of a continuous integration or deployment system to make a decision within the pipeline.

- Manual test case management and test runs. If your team needs to run manual tests as well as automated tests - most teams working on front-ends will have some manual testing - then it's useful if the test automation reporting tool can also handle test case management for manual tests. Tesults provides Lists and Runs for this purpose. Check that any tool you are considering also has facilities for manual test case management.

- Security and audit. Ensure the test automation reporting tool meets appropriate security and audit requirements. For on-premise solutions, work with your IT and security team to ensure safeguards are in place. For cloud-based services, as with any cloud provider, check that security policies are clear and appropriate.

Ajeet Dhaliwal writing for Tesults (tesults.com)