Swift test reporting

Installation

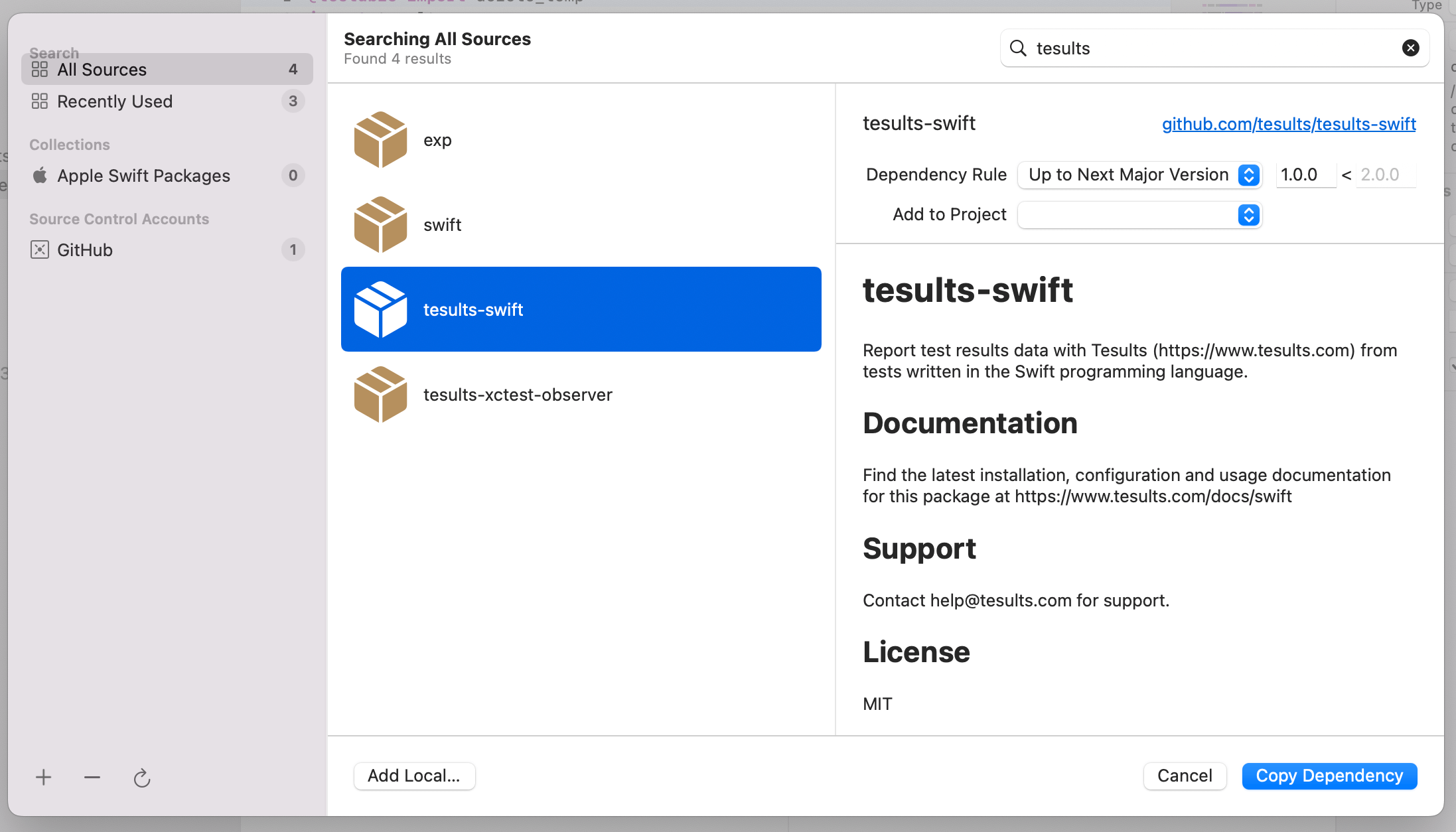

Add the tesults-swift package as a dependency to your project. Search for tesults-swift in Xcode to find the package.

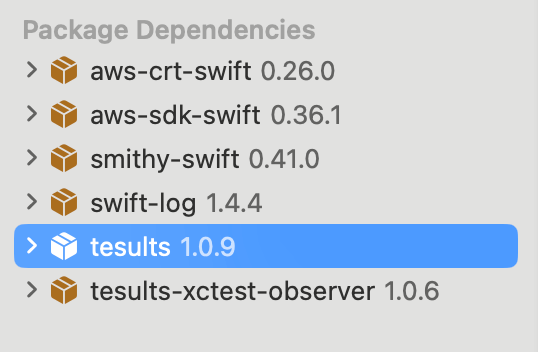

Ensure you install version 1.0.9 or higher. You can verify the version you have installed by checking package dependencies.

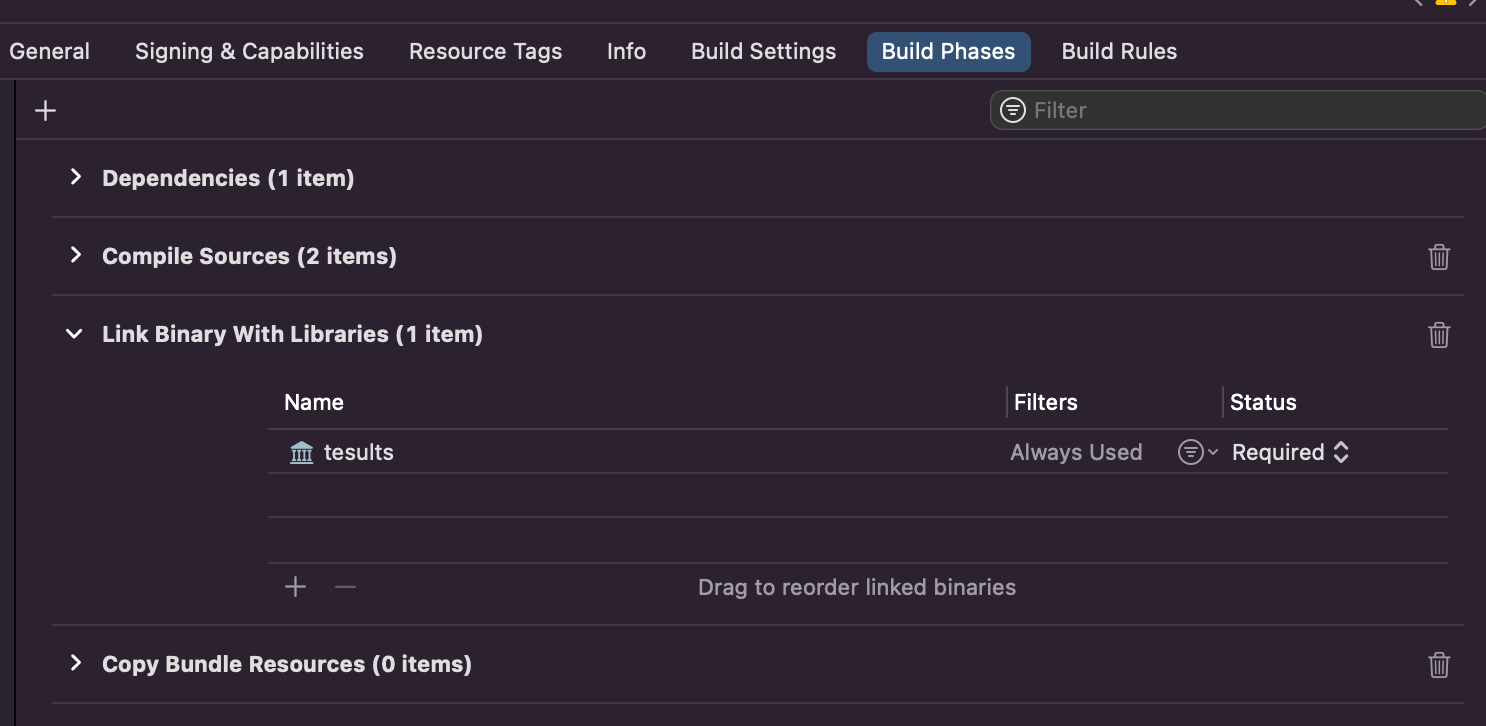

If you receive a build error about missing dependencies for tests, ensure the Tesults library is added to the Link Binary With Libraries section of Build Phases for the target.

Configuration

Import the package in any file that needs it:

import tesults

Usage

Upload results using the results function of the Tesults struct. This is the single point of contact between your code and Tesults.

Tesults().results(data: data)

The results function is async, so call it with await. It returns a ResultsResponse struct with four properties indicating success or failure:

print("Tesults results upload...")

let resultsResponse = await Tesults().results(data: data)

print("Success: \(resultsResponse.success)")

print("Message: \(resultsResponse.message)")

print("Warnings: \(resultsResponse.warnings.count)")

print("Errors: \(resultsResponse.errors.count)")

Use the ResultsResponse properties to check whether the upload succeeded:

- success is a Bool: true if the results uploaded successfully, false otherwise.

- message is a String: if success is false, check message to see why the upload failed.

- warnings is a [String]: if its count is not zero, there may be issues with file uploads.

- errors is a [String]: if success is true, this will be empty.
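The four properties above can be folded into a single log line, which is convenient in CI output. The following is a minimal sketch; summarizeUpload is a hypothetical helper, not part of tesults-swift, and it takes plain values so it can be tried without the package installed.

```swift
import Foundation

// Hypothetical helper (not part of tesults-swift): turns the four values
// described above into a one-line summary suitable for CI logs.
func summarizeUpload(success: Bool, message: String,
                     warnings: [String], errors: [String]) -> String {
    if !success {
        // On failure, message explains why; errors may carry further detail.
        let detail = errors.isEmpty ? "" : " (" + errors.joined(separator: "; ") + ")"
        return "Upload failed: \(message)\(detail)"
    }
    if !warnings.isEmpty {
        // Success with warnings may point at file upload problems.
        return "Upload succeeded with \(warnings.count) warning(s): "
            + warnings.joined(separator: "; ")
    }
    return "Upload succeeded: \(message)"
}

// With a real response this would be called as:
// let r = await Tesults().results(data: data)
// print(summarizeUpload(success: r.success, message: r.message,
//                       warnings: r.warnings, errors: r.errors))
```

Checking success first and warnings second keeps a failed upload from being reported as a partial success.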

The data parameter passed to results is a Dictionary<String, Any?> containing your results data. Here is a complete example showing how to populate data with your build and test results and then upload it:

Complete example:

// Required imports:

import tesults

// Other imports:

import XCTest

@testable import app_under_test

// Array to hold test case data.

var cases : [Dictionary<String, Any>] = []

// Each test case is a Dictionary<String, Any> too. We use generic types rather than

// concrete helper classes so that if and when the Tesults service adds more fields

// you do not have to update the library.

// You would usually add test cases in a loop taking the results from the data objects

// in your build/test scripts. At a minimum you must add a name and result:

var testCase1 = Dictionary<String, Any>()

testCase1["name"] = "Test Case 1"

testCase1["result"] = "pass" // result value must be pass, fail or unknown

testCase1["suite"] = "Suite A" // Suite is usually the class name

testCase1["desc"] = "Test Case 1 description"

cases.append(testCase1)

var testCase2 = Dictionary<String, Any>()

testCase2["name"] = "Test 2"

testCase2["desc"] = "Test 2 description"

testCase2["suite"] = "Suite B"

testCase2["result"] = "pass"

// (Optional) Add start and end time for the test (in milliseconds since epoch).

// In this example, end is set to 100 seconds later.

testCase2["start"] = Date().timeIntervalSince1970 * 1000

testCase2["end"] = (Date().timeIntervalSince1970 + 100) * 1000

// An optional duration can also be set if a start or end time is unavailable.

testCase2["duration"] = 100 * 1000

// (Optional) For a parameterized test case:

testCase2["params"] = ["Param 1" : "Param 1 Value", "Param 2" : "Param 2 Value"]

// (Optional) Custom fields start with an underscore:

testCase2["_Custom field"] = "Custom field value"

cases.append(testCase2)

var testCase3 = Dictionary<String, Any>()

testCase3["name"] = "Test 3"

testCase3["desc"] = "Test 3 description"

testCase3["suite"] = "Suite A"

testCase3["result"] = "fail"

testCase3["reason"] = "Assert fail in line 203 of example.swift." // Test failure reason

// (Optional) For uploading files:

testCase3["files"] = ["/full/path/to/file/log.txt", "/full/path/to/file/screenshot.png"]

// (Optional) For providing test steps for a test case:

testCase3["steps"] = [

[

"name":"Step 1",

"desc":"Step 1 description",

"result":"pass"

],

[

"name":"Step 2",

"desc":"Step 2 description",

"result":"fail",

"reason":"Failure reason if result is a fail"

]

]

cases.append(testCase3)

// Prepare data for upload

var data = Dictionary<String, Any>()

data["target"] = "token" // The token for the target you wish to upload to

data["results"] = ["cases" : cases] // Test case data

// Upload

print("Tesults results upload...")

let resultsResponse = await Tesults().results(data: data)

print("Success: \(resultsResponse.success)")

print("Message: \(resultsResponse.message)")

print("Warnings: \(resultsResponse.warnings.count)")

print("Errors: \(resultsResponse.errors.count)")

Replace the target value 'token' above with your Tesults target token. If you have lost your token, you can regenerate one from the config menu.
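One way to avoid hard-coding the token in source is to read it from the environment at run time. The sketch below assumes an environment variable named TESULTS_TARGET_TOKEN; that name is chosen for illustration only and is not defined by Tesults.

```swift
import Foundation

// Reads the target token from an environment dictionary.
// TESULTS_TARGET_TOKEN is a hypothetical variable name used for this sketch.
func targetToken(from environment: [String: String] = ProcessInfo.processInfo.environment,
                 key: String = "TESULTS_TARGET_TOKEN") -> String? {
    // Treat a missing or whitespace-only value as "no token".
    guard let token = environment[key]?.trimmingCharacters(in: .whitespacesAndNewlines),
          !token.isEmpty else {
        return nil
    }
    return token
}

// Usage when preparing the upload data:
// if let token = targetToken() { data["target"] = token }
```

Returning nil instead of an empty string lets the caller skip the upload, or fail the run, before any request is made.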

Test case properties

This is a complete list of test case properties for reporting results. Required fields must have values; otherwise the upload will fail with an error message about missing fields.

| Property | Required | Description |
|---|---|---|
| name | * | Name of the test. |
| result | * | Result of the test. Must be one of: pass, fail, unknown. Set to 'pass' for a test that passed, 'fail' for a failure. |
| suite | | Suite the test belongs to. This is a way to group tests. |
| desc | | Description of the test. |
| reason | | Reason for the test failure. Leave this empty or do not include it if the test passed. |
| params | | Parameters of the test if it is a parameterized test. |
| files | | Files that belong to the test case, such as logs, screenshots, metrics and performance data. |
| steps | | A list of test steps that constitute the actions of a test case. |
| start | | Start time of the test case in milliseconds from Unix epoch. |
| end | | End time of the test case in milliseconds from Unix epoch. |
| duration | | Duration of the test case running time in milliseconds. There is no need to provide this if start and end are provided; it will be calculated automatically by Tesults. |
| rawResult | | Report a result to use with the result interpretation feature. This can give you finer control over how to report result status values beyond the three Tesults core result values of pass, fail and unknown. |
| _custom | | Report any number of custom fields. To report custom fields, add a field name starting with an underscore ( _ ) followed by the field name. |
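The property names in the table can be wrapped in a small builder so that required fields are never forgotten and result values are restricted to the three core values. This is a sketch only; CoreResult and makeTestCase are hypothetical names, not part of tesults-swift.

```swift
import Foundation

// The three core result values listed in the table above.
enum CoreResult: String {
    case pass, fail, unknown
}

// Hypothetical helper (not part of tesults-swift) that builds one test case
// dictionary using the property names from the table above.
func makeTestCase(name: String,
                  result: CoreResult,
                  suite: String? = nil,
                  reason: String? = nil,
                  start: Date? = nil,
                  end: Date? = nil,
                  custom: [String: Any] = [:]) -> [String: Any] {
    // name and result are the two required properties.
    var testCase: [String: Any] = ["name": name, "result": result.rawValue]
    if let suite = suite { testCase["suite"] = suite }
    // A reason is left out when the test passed.
    if result != .pass, let reason = reason { testCase["reason"] = reason }
    // start and end are reported in milliseconds since the Unix epoch.
    if let start = start { testCase["start"] = start.timeIntervalSince1970 * 1000 }
    if let end = end { testCase["end"] = end.timeIntervalSince1970 * 1000 }
    // Custom field names start with an underscore; add one if it is missing.
    for (key, value) in custom {
        testCase[key.hasPrefix("_") ? key : "_" + key] = value
    }
    return testCase
}
```

A call such as makeTestCase(name: "Login", result: .fail, suite: "Auth", reason: "Timeout") returns a dictionary that can be appended to the cases array directly.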

Build properties

To report build information, add another case to the cases array with suite set to [build]. This is a complete list of build properties for reporting results. Required fields must have values; otherwise the upload will fail with an error message about missing fields.

| Property | Required | Description |
|---|---|---|
| name | * | Name of the build, revision, version, or change list. |
| result | * | Result of the build. Must be one of: pass, fail, unknown. Use 'pass' for build success and 'fail' for build failure. |
| suite | * | Must be set to value '[build]', otherwise will be registered as a test case instead. |
| desc | | Description of the build or changes. |
| reason | | Reason for the build failure. Leave this empty or do not include it if the build succeeded. |
| params | | Build parameters or inputs if there are any. |
| files | | Build files and artifacts such as logs. |
| start | | Start time of the build in milliseconds from Unix epoch. |
| end | | End time of the build in milliseconds from Unix epoch. |
| duration | | Duration of the build time in milliseconds. There is no need to provide this if start and end are provided; it will be calculated automatically by Tesults. |
| _custom | | Report any number of custom fields. To report custom fields, add a field name starting with an underscore ( _ ) followed by the field name. |
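As a sketch of the build properties above, a build can be reported by appending one extra dictionary with suite set to "[build]" to the same cases array used for test cases. makeBuildCase is a hypothetical helper, and the build name "1.0.0" is a placeholder.

```swift
import Foundation

// Hypothetical helper (not part of tesults-swift): builds the extra case
// that carries build information.
func makeBuildCase(name: String, succeeded: Bool,
                   desc: String? = nil, reason: String? = nil) -> [String: Any] {
    var build: [String: Any] = [
        "name": name,
        "result": succeeded ? "pass" : "fail",
        "suite": "[build]"   // must be exactly "[build]"
    ]
    if let desc = desc { build["desc"] = desc }
    // Only include a reason when the build failed.
    if !succeeded, let reason = reason { build["reason"] = reason }
    return build
}

// The build case sits in the same array as ordinary test cases.
var cases: [[String: Any]] = [
    ["name": "Test Case 1", "result": "pass", "suite": "Suite A"]
]
cases.append(makeBuildCase(name: "1.0.0", succeeded: true, desc: "Nightly build"))
```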


When executing multiple test runs in parallel or serially for the same build or release, results are submitted to Tesults separately and multiple test runs are generated on Tesults. This is because the default behavior on Tesults is to treat each results submission as a separate test run.

This behavior can be changed from the configuration menu.

Build Consolidation

Click 'Build Consolidation' from the Configuration menu to enable or disable consolidation for a project or by target.

When build consolidation is enabled, multiple test runs submitted at different times with the same build name are consolidated into a single test run by Tesults automatically.

This is useful for test frameworks that run batches of test cases in parallel. If you do not have a build name to use for consolidation, consider using a timestamp set at the time the test run starts.
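If a timestamp is used as the build name, it needs to be computed once when the run starts and then shared with every parallel batch, so that all submissions carry an identical name. A minimal sketch, assuming an ISO 8601 string is an acceptable build name; timestampBuildName is a hypothetical helper.

```swift
import Foundation

// Hypothetical helper: formats the start of the test run as a build name.
// ISO8601DateFormatter defaults to GMT and the internet date-time format.
func timestampBuildName(for startOfRun: Date) -> String {
    return ISO8601DateFormatter().string(from: startOfRun)
}

// Compute the name once at the start of the run, then pass the same string
// to every batch (for example through an environment variable).
let buildName = timestampBuildName(for: Date())
let buildCase: [String: Any] = [
    "name": buildName,
    "result": "pass",
    "suite": "[build]"
]
```

Calling Date() separately in each parallel batch would produce different names and defeat consolidation, which is why the value is generated in one place.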

Build Replacement

When build consolidation is enabled, an additional option, build replacement, can also be enabled. As with build consolidation, results from multiple test runs submitted with the same build name are consolidated. With replacement enabled, however, if a test case with the same suite and name is received more than once, the last received test case replaces the existing one instead of being appended. This is useful when test cases are often re-run for the same build and you are only interested in the latest result for the run; otherwise, this option is generally best left disabled.
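The difference between consolidation alone and consolidation with replacement can be modeled locally. The sketch below is only an illustration of the outcome described above; it is not how Tesults implements the feature, and consolidate and ReportedCase are hypothetical names.

```swift
import Foundation

// A local model of the documented outcome, NOT the Tesults implementation.
typealias ReportedCase = [String: String]

// Merges several submissions that share a build name into one list of cases.
func consolidate(_ submissions: [[ReportedCase]], replacement: Bool) -> [ReportedCase] {
    var merged: [ReportedCase] = []
    for submission in submissions {
        for testCase in submission {
            // Two cases are the same test when suite and name both match.
            let sameTest: (ReportedCase) -> Bool = {
                $0["suite"] == testCase["suite"] && $0["name"] == testCase["name"]
            }
            if replacement, let index = merged.firstIndex(where: sameTest) {
                merged[index] = testCase   // last received case wins
            } else {
                merged.append(testCase)    // consolidation only: append
            }
        }
    }
    return merged
}
```

With a first submission reporting suite "A", name "t1" as fail and a re-run reporting the same test as pass, the model returns two cases without replacement and a single passing case with it.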

Dynamically created test cases

If you dynamically create test cases, such as test cases with variable values, we recommend that the test suite and test case names themselves be static. Provide the variable data in the test case description or in custom fields, but try to keep the test suite and test name static. If you change your test suite or test name on every test run, you will not benefit from a range of features Tesults has to offer, including test case failure assignment and historical results analysis. You need not make your tests any less dynamic; variable values can still be reported within test case details.
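As an illustration of this recommendation, the sketch below generates one case per input value while keeping suite and name constant, and reports the changing value under params and a custom field. The suite name, test name, and makeParameterizedCase are placeholders invented for this example.

```swift
import Foundation

// Hypothetical helper: the suite and name never change between runs;
// the value that varies is reported in params and in a custom field.
func makeParameterizedCase(locale: String, passed: Bool) -> [String: Any] {
    return [
        "suite": "Checkout",                       // static
        "name": "Total is formatted for locale",   // static, no variable data
        "result": passed ? "pass" : "fail",
        "params": ["locale": locale],              // variable data goes here
        "_Run locale": locale                      // or in a custom field
    ]
}

// One dynamically generated case per locale, all sharing suite and name.
let dynamicCases = ["en_US", "de_DE", "ja_JP"].map {
    makeParameterizedCase(locale: $0, passed: true)
}
```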

Proxy servers

Does your corporate or office network run behind a proxy server? Contact us and we will supply you with a custom API library for this case. Without it, results will fail to upload to Tesults.