Flaky tests are hiding in plain sight

Identifying them is the first step towards a robust, reliable and valuable test suite

11 Sep 2019

Flaky tests are more prevalent in some areas of automated testing than in others. Tests against APIs and other purely programmatic endpoints are usually much less prone to the issue, while UI and front-end tests are more likely to exhibit it. One reason flaky behavior shows up so often in UI tests is that programmatically manipulating mechanisms and controls designed for humans, rather than for programs, presents challenges that programmatic interfaces do not.

Humans respond to visual cues and other UI feedback in ways that are difficult to replicate in an automated test. Programmatic interfaces, in contrast, often have a built-in protocol for error handling. For example, if a button click plays a loading animation for a short while before the next screen loads, a human knows to wait before proceeding. If the animation plays only sometimes, and at other times the next screen loads immediately, the developer may not have seen the animation when creating the automated test and so coded the test to proceed to the next step immediately. The new test is committed, continuous integration creates a new build and runs the test, and the test passes. A couple of days later, the test fails. The next day it passes again. A 'flaky test' has unwittingly been introduced to the test suite; it's that easy.

Flaky tests should be fixed as soon as possible; otherwise the trust placed in an automated test suite, its reliability and, by extension, the value extracted from it all come into question. Before a fix can take place, though, the flaky test needs to be identified.

There are many benefits to storing old test results, and one of them is identifying flaky tests. A single set of test results does not usually provide any clue that a particular failure is due to flaky behavior. Worse, it gives no clue as to whether a particular pass is due to flaky behavior, so a real issue with the application under test may go unidentified. In most test suites, the number of test cases makes identifying flaky tests from a single test run difficult and prohibitively time-consuming.

The practical way to identify flaky tests is to store the results of each test run and then, on each new run, check whether a particular test case has switched or 'flipped' between passing and failing a number of times over the previous runs. When this is observed, the test case may be flaky and should be investigated further.
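A minimal sketch of this flip check, assuming results are kept in memory as pass/fail booleans per test case (a real setup would persist them to a file or database between runs). The class `ResultHistory`, its methods and the `min_flips` threshold are hypothetical names chosen for illustration, not part of any particular tool.

```python
from collections import defaultdict


class ResultHistory:
    """Keeps pass/fail outcomes per test case across runs (in memory, for illustration)."""

    def __init__(self):
        # Test case name -> outcomes in run order, oldest first (True = pass).
        self._outcomes = defaultdict(list)

    def record_run(self, results):
        """Append one run's results, e.g. {"Test0": True, "Test1": False}."""
        for case, passed in results.items():
            self._outcomes[case].append(passed)

    def flips(self, case):
        """Count how many times a case switched between pass and fail."""
        outcomes = self._outcomes.get(case, [])
        return sum(1 for prev, cur in zip(outcomes, outcomes[1:]) if prev != cur)

    def possibly_flaky(self, min_flips=2):
        """Names of cases that flipped at least min_flips times, sorted."""
        return sorted(c for c in self._outcomes if self.flips(c) >= min_flips)


history = ResultHistory()
history.record_run({"Test0": True, "Test1": True})
history.record_run({"Test0": False, "Test1": True})
history.record_run({"Test0": True, "Test1": False})
print(history.possibly_flaky())  # ['Test0']
```

Here Test0 passes, fails, then passes again (two flips) and is flagged, while Test1 has failed once (one flip) and is not. The threshold is an assumption to tune: a genuine regression that is later fixed also produces two flips, which is one reason to consider how frequent and recent the flips are rather than relying on a raw count alone.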

Automating the identification of flaky test cases becomes more important as a test suite grows. Once there are hundreds or thousands of test cases, it becomes practically impossible to know individual cases well enough to remember or notice that there may be flaky behavior.

Once trust in some test cases starts to erode, doubt can spread to the whole suite, so it is important to make this a priority. Again: store test results for every test run, and analyze each case for result 'flipping' at a frequency and interval that justifies flagging it as potentially flaky. Then assign the test case to yourself or someone else to fix as soon as possible. Keep your test suite healthy, robust and highly valuable.
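One way to take frequency and interval into account is to count flips only within each case's most recent runs, so that old, long-fixed instability does not keep a case flagged forever. The sketch below assumes each case's history is a list of booleans (True = pass), oldest first; `flaky_candidates`, `count_flips`, `window` and `min_flips` are hypothetical names, and the default values are placeholders to tune rather than recommendations.

```python
from typing import Dict, List, Sequence, Tuple


def count_flips(outcomes: Sequence[bool]) -> int:
    """Number of pass/fail transitions between consecutive runs."""
    return sum(1 for prev, cur in zip(outcomes, outcomes[1:]) if prev != cur)


def flaky_candidates(
    histories: Dict[str, List[bool]], window: int = 10, min_flips: int = 3
) -> List[Tuple[str, int]]:
    """Return (case, flips) for cases that flipped at least min_flips times
    within their last `window` runs (window should be at least 2), most flips first."""
    flagged = []
    for case, outcomes in histories.items():
        recent = outcomes[-window:]  # only the most recent runs count
        flips = count_flips(recent)
        if flips >= min_flips:
            flagged.append((case, flips))
    # Worst offenders first; ties broken by name for a stable order.
    flagged.sort(key=lambda item: (-item[1], item[0]))
    return flagged


histories = {
    "Test0": [True, False, True, False, True],
    "Test1": [True, True, True, True, True],
    "Test2": [False, False, True, True, False],
    "Test3": [True, False, True, False] + [True] * 10,  # stable lately
}
print(flaky_candidates(histories, window=5, min_flips=2))
# [('Test0', 4), ('Test2', 2)]
```

Test3 flipped four times early in its history but has passed consistently since, so with a window of five runs it is no longer flagged. Sorting by flip count gives a simple order in which to assign the flagged cases for fixing.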

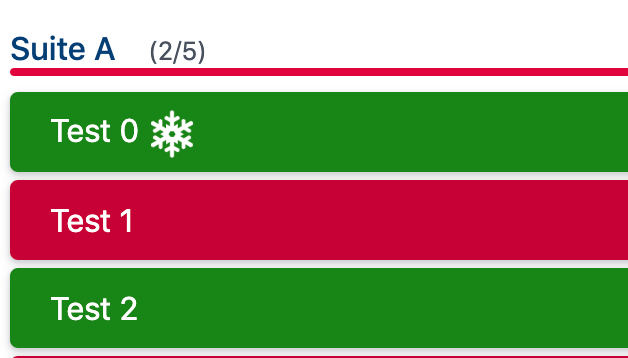

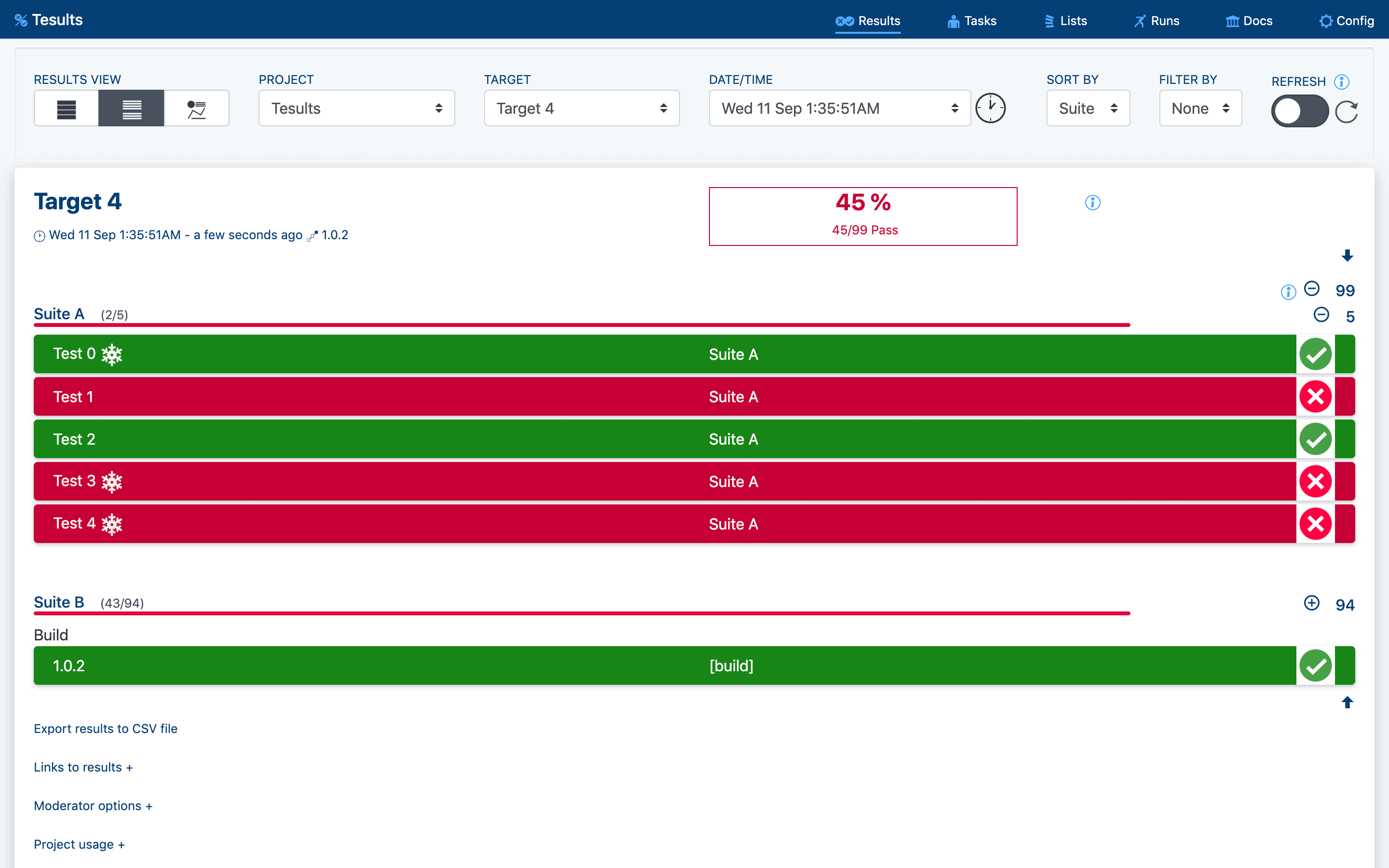

If you use Tesults to report results, this is all taken care of automatically. Individual test cases that may be flaky are marked with the 'flaky test indicator'. Here's an example:

Above, Test0 is potentially flaky.

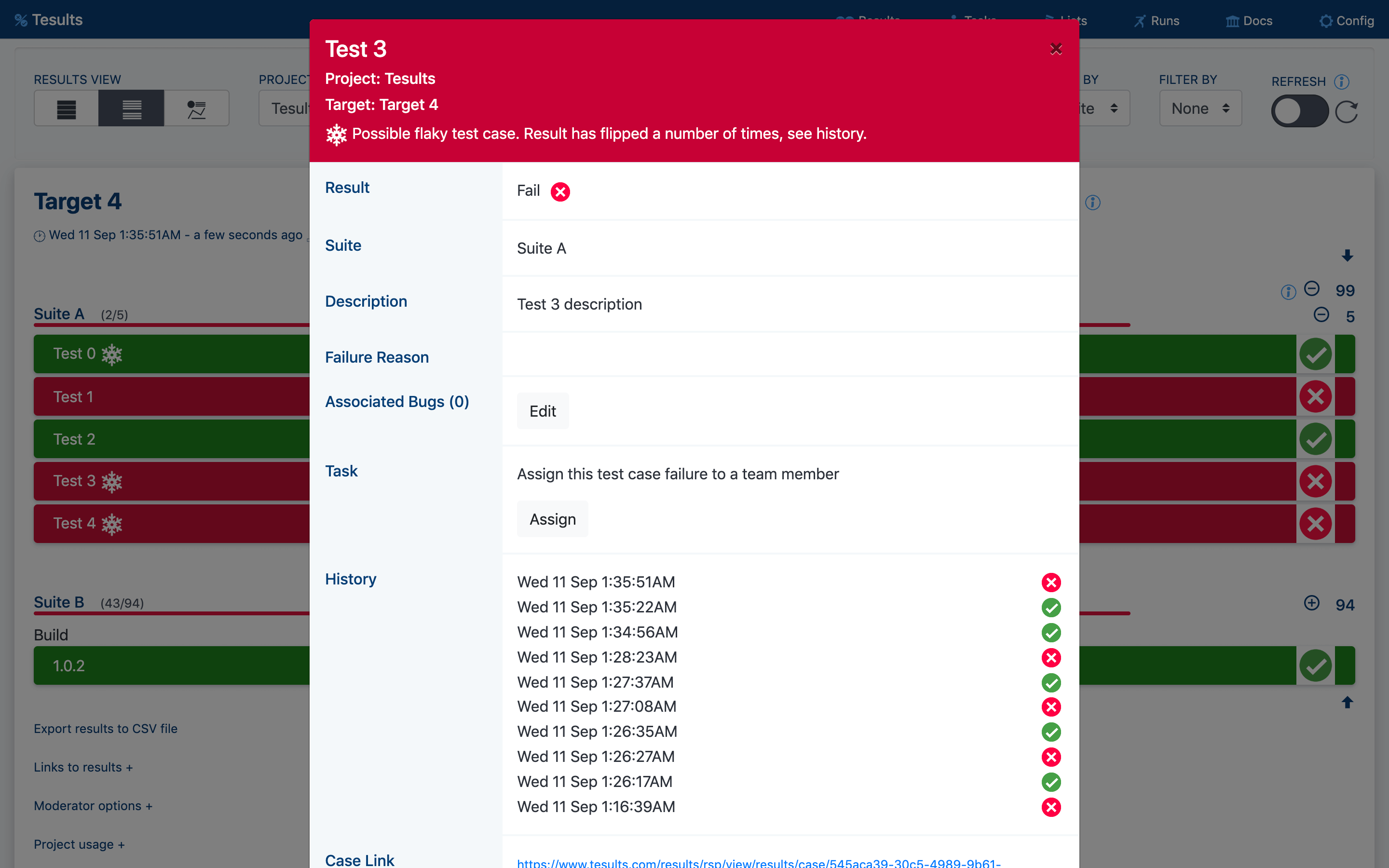

If you see a test case with the flaky test indicator present, open it to see case details. The case history will be displayed showing how often and when the test results changed between pass and fail.

Note that a test case that appears to be flaky is not always a flaky test. The test case may instead be discovering that the application under test is flaky. This can have a range of causes, often a nasty bug such as a race condition or a memory allocation issue. In this case it is still worth highlighting the test case as flaky, because doing so invites investigation and subtle bugs can be uncovered this way.

The good news is that once a flaky test is identified, the fix is often straightforward. A common cause of flaky tests, especially automated UI tests, is loading and timing problems. If you drive a browser programmatically with a library like Selenium and a test case sometimes fails to find an element on a page, perhaps because the page has not fully loaded yet, the fix may simply be to adjust the maximum time to wait for the element before failing. If the element is found right away, the test continues right away; there is only a wait when the element is not found immediately. This keeps test runs time-efficient and can resolve flaky tests caused by variable network performance or connectivity.
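Selenium supports this directly through implicit waits (in the Python bindings, `driver.implicitly_wait(seconds)`) and explicit waits (`WebDriverWait(driver, timeout).until(condition)`). The library-agnostic sketch below shows the underlying polling idea using only the standard library: it returns as soon as the lookup succeeds and only waits, up to a maximum, when it does not. `wait_for`, `timeout` and `poll_interval` are hypothetical names; `find` stands in for any lookup that returns `None` while the element is missing (Selenium's own `find_element` raises an exception instead, so it would need wrapping).

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_for(
    find: Callable[[], Optional[T]], timeout: float = 10.0, poll_interval: float = 0.25
) -> T:
    """Call find() until it returns a non-None value; raise TimeoutError after
    `timeout` seconds. Returns immediately if the first attempt succeeds."""
    deadline = time.monotonic() + timeout
    while True:
        result = find()
        if result is not None:
            return result  # found: no further waiting
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        # Sleep briefly before retrying, never past the deadline.
        time.sleep(min(poll_interval, remaining))


# Simulate an element that only appears on the third lookup, e.g. after a
# loading animation finishes.
attempts = iter([None, None, "submit-button"])
print(wait_for(lambda: next(attempts), timeout=2.0, poll_interval=0.01))
# submit-button
```

The `timeout` is the maximum wait, not a fixed delay: a fast page costs nothing extra, while a slow one gets extra time before the test fails.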

Good luck handling flaky tests. Try not to view results in isolation: record historical results and use them for analysis. Keep your test suites robust!

- Ajeet Dhaliwal